Though recent trends have seen a growing interest in complex 3D cell models, 2D cell monolayer models remain a cornerstone of cell-based research thanks to well-established and reproducible protocols. And yet, though cell imaging techniques for 2D models are widely accessible, how best to analyze those images and draw scientific conclusions from them has remained an open question, even in industry circles. Here, Tim Jackson, Senior Image Processing Engineer, BioAnalytics, and Rickard Sjögren, Senior Scientist and Machine Learning Team Lead, Corporate Research, both at Sartorius, explain how LIVECell, a new resource captured on the live-cell imaging platform Incucyte and designed to enable deep learning-based image analysis, can help companies build their understanding of cell behavior and morphology.

Why is live-cell imaging so important?

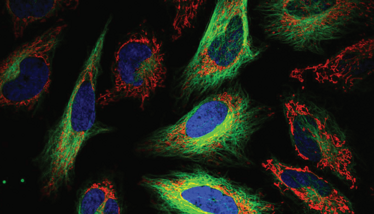

Most researchers agree that live-cell imaging provides a promising approach for cell biology research thanks to its high resolution in both space and time. In particular, label-free imaging avoids many of the challenges of fluorescence imaging. But the images produced are much harder to analyze due to low contrast and complicated cell morphologies. To gain detailed insight into individual cell behavior, we need to segment images to localize each cell, a task that is incredibly hard to do based on label-free microscopy images.

Deep learning-based segmentation using artificial neural networks provides a promising way of segmenting complicated images.

Is pharma receptive to deep learning platforms?

Data-driven analysis in the drug development space is not new. For instance, chemometrics (the data-driven extraction of information from chemical systems) has been used to perform quantitative structure-activity relationship (QSAR) studies for decades. However, as technological advances allow instruments to collect more and increasingly detailed data, analytic methodologies must also evolve.

Deep learning and its use of artificial neural network (ANN) models have enjoyed popularity in recent years due to their astounding capability to model complex relationships when given large enough training datasets. Notably, in the past decade, we have seen a surge in unstructured data such as images, videos, and text in contrast to structured data that comes in the form of tables. Deep learning-based analysis is particularly well suited for analyzing such unstructured data in order to transform it into hard numbers.

How can deep learning technologies help improve the drug discovery process?

Live-cell imaging and analysis approaches can be used to identify and outline individual cells, measure cell culture confluence, quantitatively describe the morphology of individual cells, assess the health of individual cells based on their morphology, and much more.
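To make those measurements concrete, here is a minimal sketch of how confluence and simple per-cell morphology numbers could be read off a segmentation result. It assumes the segmentation is available as a label mask (a grid where 0 is background and each positive integer marks one cell). The function names `confluence` and `cell_morphology` and the toy mask are hypothetical illustrations, not part of LIVECell or the Incucyte software.

```python
def confluence(label_mask):
    """Fraction of pixels covered by any cell (label > 0)."""
    total = sum(len(row) for row in label_mask)
    covered = sum(1 for row in label_mask for value in row if value > 0)
    return covered / total if total else 0.0


def cell_morphology(label_mask):
    """Per-cell area in pixels, bounding box, and extent (area / bounding-box area)."""
    stats = {}
    for r, row in enumerate(label_mask):
        for c, value in enumerate(row):
            if value <= 0:
                continue  # background pixel
            s = stats.setdefault(
                value, {"area": 0, "rmin": r, "rmax": r, "cmin": c, "cmax": c}
            )
            s["area"] += 1
            s["rmin"], s["rmax"] = min(s["rmin"], r), max(s["rmax"], r)
            s["cmin"], s["cmax"] = min(s["cmin"], c), max(s["cmax"], c)
    for s in stats.values():
        height = s["rmax"] - s["rmin"] + 1
        width = s["cmax"] - s["cmin"] + 1
        # Extent is 1.0 for a filled rectangle and drops for irregular shapes.
        s["extent"] = s["area"] / (height * width)
    return stats


# Toy 4 x 5 mask with two cells (labels 1 and 2); purely illustrative.
mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 2, 2],
    [0, 0, 0, 0, 2],
]

print(confluence(mask))
for cell_id, s in sorted(cell_morphology(mask).items()):
    print(cell_id, s["area"], round(s["extent"], 2))
```

Real pipelines would typically add richer descriptors such as perimeter, eccentricity, or texture, but the principle is the same: once each cell is outlined, the image becomes a table of per-cell numbers.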

With deep learning technologies, more training data generally means better systems. In the past, the largest dataset of label-free images available to researchers consisted of 4,600 images containing 26,000 cells. New imaging types are certainly more diverse. When making LIVECell publicly available, we aimed to push the boundaries by including more than 5,000 label-free phase-contrast microscopy images of more than 1.6 million cells.

The diversity of cell types and confluence conditions captured and annotated in LIVECell overcomes many of the challenges companies face by facilitating the training of deep learning-based segmentation models. Researchers now have an unprecedented, high-quality label-free segmentation resource and starting point for training neural networks. Because neural network-based algorithms are orders of magnitude more complex than traditional image analysis methods, this dataset will allow for more robust segmentation of various cell morphologies and ultimately minimize user-introduced biases.

Credit: commons.wikimedia.org

Are there applications of deep learning that the industry has yet to explore?

Definitely. The ability to derive physiologically relevant data from label-free microscopy images is a cornerstone of pharmaceutical research, and datasets containing images of millions of cells facilitate exploration of biological phenomena with great statistical power. To compensate for a lack of image resolution, however, sophisticated image processing pipelines are needed to generate the accurate cell-by-cell, pixel-by-pixel segmentations required to capture subtle changes in cell size, shape, and texture, particularly if the goal is to investigate events at the level of cellular subpopulations or individual cells.

Though neural networks can learn and adapt to identify and segment a variety of cells, they first require training with high-quality datasets representative of the breadth of the cell morphologies to be encountered. Achieving accurate segmentation in microscopy images is essential for quantitative downstream analysis but is a challenging task. Traditional image analysis methods often require tedious algorithm customization and rigorous tuning of parameters specific to the cell morphology of interest.
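The parameter-tuning burden of traditional methods can be illustrated with a deliberately simple sketch: intensity thresholding followed by connected-component labeling and a minimum-area filter. This is a toy stand-in, not the method used for LIVECell or by any particular product, and real label-free phase-contrast images are far less forgiving than the made-up intensity grid below. The function name `threshold_segment` and its parameters `threshold` and `min_area` are hypothetical.

```python
from collections import deque


def threshold_segment(image, threshold, min_area=1):
    """Label 4-connected regions brighter than `threshold`.

    Regions smaller than `min_area` pixels are discarded.
    Returns (label_grid, number_of_regions_kept).
    """
    height = len(image)
    width = len(image[0]) if height else 0
    labels = [[0] * width for _ in range(height)]
    next_label = 1
    for r in range(height):
        for c in range(width):
            if image[r][c] <= threshold or labels[r][c] != 0:
                continue  # background, or already visited
            # Flood-fill one region starting from this seed pixel.
            labels[r][c] = next_label
            region = [(r, c)]
            queue = deque(region)
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and labels[ny][nx] == 0
                            and image[ny][nx] > threshold):
                        labels[ny][nx] = next_label
                        region.append((ny, nx))
                        queue.append((ny, nx))
            if len(region) < min_area:
                for y, x in region:
                    labels[y][x] = -1  # visited but rejected as too small
            else:
                next_label += 1
    # Turn rejected markers back into background.
    labels = [[v if v > 0 else 0 for v in row] for row in labels]
    return labels, next_label - 1


# Made-up intensities: one bright object and one dim object on a dark background.
image = [
    [10, 10, 10, 10, 10, 10],
    [10, 80, 90, 10, 40, 10],
    [10, 85, 95, 10, 45, 10],
    [10, 10, 10, 10, 10, 10],
]

print(threshold_segment(image, threshold=50)[1])              # dim object is missed
print(threshold_segment(image, threshold=30)[1])              # both objects found
print(threshold_segment(image, threshold=30, min_area=3)[1])  # small object filtered out
```

Even in this tiny example, the number of detected objects depends entirely on two hand-picked values. Choosing them per cell type and imaging condition is the kind of tuning that a network trained on a broad, well-annotated dataset is meant to avoid.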

Though there has been exciting progress in the research community in imaging of such complex structures, both label-free and fluorescence-based, much remains to be done, and adoption in industry is still in its infancy.

What’s next for LIVECell?

Even though LIVECell provides a very large and rich resource for live-cell imaging, opportunities to improve it remain. During our work with LIVECell, we quickly noted that certain cell types were much more difficult to segment than others. Neuronal cell types, with their protrusive and non-convex morphologies, were particularly challenging. To address this issue, we assembled a new dataset targeting neuronal cell types specifically. We then decided to host a machine learning competition on Kaggle, the largest platform for such competitions, to segment these neuronal cell types. This competition has recently finished, and we are thoroughly impressed by the community’s openness and ingenuity. It’s exciting to witness the field’s progress.
