Pass Me a Bottle Opener

Upstream processes in biopharma manufacturing are growing ever more efficient; conversely, downstream processing is increasingly a bottleneck. Can a new generation of chromatography techniques and technologies get things moving again?

Biopharmaceutical products are manufactured by producing a synthetic or recombinant peptide or protein (upstream processing), purifying and preparing the appropriate pure active ingredient salt form (downstream processing), and then formulating the active ingredient. Historically, upstream processing limited final manufacturing yield and efficiency, but the tide has turned in recent years. Improvements in recombinant production titer and synthetic peptide starting materials have increased upstream yields and instead turned downstream processing into the major bottleneck. In this article, I’ll guide you through the pressure points in downstream processing and look at emerging technologies that are clearing the way.

First, we need a definition. Definitions of a biopharmaceutical product vary, but for this article we’ll assume that the term encompasses recombinant proteins from living biological sources, such as antibodies or erythropoietins, plus synthetically produced molecules, such as larger peptides, that are sizeable enough to have a secondary structure. Downstream processing may account for up to 80 percent of production costs for these products, and the downstream processing equipment market is worth $5 billion per year (1, 2). Increasing the efficiency and output of the process is clearly a priority for all companies in this space.

Whatever the product, biopharma manufacturing companies face similar challenges when it comes to increasing the efficiency of downstream processing:

- Legacy plant design issues

- Capacity of downstream equipment

- Cost and capacity of chromatographic resins and capture steps

- Recent emphasis on quality by design (QbD) and predictive tools

- Increased titers upstream, resulting in disproportionately higher impurity levels

- One-size-fits-all platforms (for example, for antibody purification)

- Time of operations

- Limited options for disposable equipment

- Cost of membranes

- Cleaning and validation costs of downstream equipment

- Expensive chromatography media and filters.

It’s a daunting list. The good news is that with so much attention focusing on this area, solutions are beginning to emerge that may help manufacturers reduce costs and increase throughput.

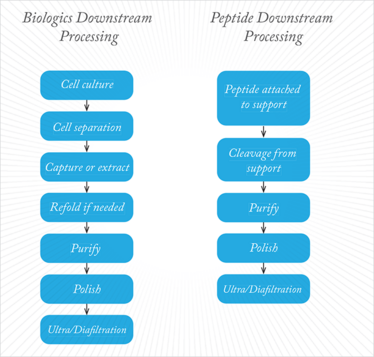

Figure 1 shows the main downstream processing steps. A whole range of techniques can be used, including centrifugation, pH adjustment, filtration, size exclusion, chromatographic purification and polishing, and buffer/salt exchange by ultrafiltration/diafiltration. But there is no question that the workhorse of downstream processing is chromatography, and this has historically been the main target for efforts to boost efficiency.

Figure 1. Generalized diagram of downstream processing steps for biologics and larger synthetic peptides.

Knowledge is power

For years, regulatory authorities have been implementing initiatives using principles defined in QbD that aim to increase the quality of drugs by identifying critical quality attributes of the product and process parameters for the manufacturing processes. If we apply these principles to chromatographic purifications, we can use mechanistic models to better design and understand the processes. These models may be built on molecular properties, molecular interactions, chromatographic resin properties, hybrid approaches (a combination of experimental and mathematical models), or simply trial-and-error (3).

The properties of the molecule and its molecular interactions with the chromatographic resin are grounded in the structure of proteins. As a refresher, the primary structure of proteins consists of chains of amino acids, which may associate via hydrogen bonds, salt bridges, disulfide bonds or post-translational modifications into secondary, tertiary or quaternary structure. The properties created from these sequence and structure characteristics affect the interaction with the resin, and can be used to predict chromatographic behavior and, thus, optimize the process.
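To make the link between sequence and resin interaction concrete, here is a minimal sketch that estimates one such property, the isoelectric point (pI), from the amino acid sequence alone. It sums Henderson-Hasselbalch charge contributions and finds the pH at which the net charge crosses zero. The pKa values are an assumed, textbook-style set (published scales differ), the function names are hypothetical, and the calculation ignores higher-order structure, disulfide bonds and post-translational modifications, so it gives a rough starting point rather than a measured value.

```python
# Assumed pKa values for ionizable groups; published scales differ, so these
# numbers are placeholders for illustration only.
PKA_POSITIVE = {"K": 10.5, "R": 12.5, "H": 6.0, "N_TERM": 9.0}
PKA_NEGATIVE = {"D": 3.9, "E": 4.1, "C": 8.3, "Y": 10.1, "C_TERM": 2.0}


def net_charge(sequence: str, ph: float) -> float:
    """Henderson-Hasselbalch estimate of the net charge of a chain at a given pH."""
    seq = sequence.upper()
    # Count each ionizable side chain, plus one free N-terminus and C-terminus.
    pos_counts = {res: seq.count(res) for res in "KRH"}
    pos_counts["N_TERM"] = 1
    neg_counts = {res: seq.count(res) for res in "DECY"}
    neg_counts["C_TERM"] = 1

    positive = sum(n / (1 + 10 ** (ph - PKA_POSITIVE[g])) for g, n in pos_counts.items())
    negative = sum(n / (1 + 10 ** (PKA_NEGATIVE[g] - ph)) for g, n in neg_counts.items())
    return positive - negative


def estimate_pi(sequence: str, tol: float = 1e-4) -> float:
    """Bisect on pH between 0 and 14; net charge decreases steadily as pH rises."""
    low, high = 0.0, 14.0
    while high - low > tol:
        mid = (low + high) / 2
        if net_charge(sequence, mid) > 0:
            low = mid   # still positively charged, so the pI lies at higher pH
        else:
            high = mid  # already negative, so the pI lies at lower pH
    return (low + high) / 2


if __name__ == "__main__":
    # Hypothetical short peptide, used only to exercise the functions.
    peptide = "ACDKRHEY"
    print(f"Estimated pI: {estimate_pi(peptide):.2f}")
```

In practice, an estimate like this might be used only to choose an initial pH window for ion-exchange screening: below its pI a protein carries a net positive charge and tends to bind cation exchangers, and above it the reverse holds.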

Model behavior

New process design tools – statistical and mechanistic models that incorporate elements of design of experiments (DoE) or QbD – also add to our understanding of what happens downstream. A good example is a study modeling lysozyme and lectin separation using hydrophobic interaction chromatography, where the authors developed mathematical formulae to predict displacement and gradient protein separation behavior (4). Molecular properties and interactions may also be used to design process development strategies. For example, the molecular weight, isoelectric point (pI) and hydrophobicity of proteins can all be used to model retention times of a protein mixture in ion-exchange chromatography, making the behavior of the product more predictable (5).
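For readers less familiar with DoE, the simplest design is a full factorial, in which every combination of factor levels is run once. The sketch below generates such a run list. The factor names and levels are hypothetical placeholders for an ion-exchange screening study, not values taken from the cited work, and real studies often use fractional or response-surface designs to reduce the number of runs.

```python
from itertools import product

# Hypothetical factors and levels; placeholders, not recommendations.
FACTORS = {
    "load_ph": [5.0, 6.0, 7.0],
    "salt_mM": [50, 150],
    "flow_cv_per_h": [10, 20],
}


def full_factorial(factors):
    """Return one dict per run, covering every combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


runs = full_factorial(FACTORS)

if __name__ == "__main__":
    # 3 x 2 x 2 levels gives 12 runs in total.
    for number, run in enumerate(runs, start=1):
        print(number, run)
```

The measured responses for each run (for example, yield or purity) can then be fitted with a statistical model to identify which process parameters matter most.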

Resin technology and automation

Packed-bed chromatography resins are continually being improved to increase efficiency and output, with resin manufacturers touting higher binding capacities and faster flow rates. Alternative approaches to standard reversed-phase, ion-exchange, or size-exclusion chromatography are also being explored, including simulated moving bed chromatography (SMB). SMB has been available for over a decade, but is increasingly gaining favor for its increased productivity, reduced footprint, ability to recycle buffers, and potential for automation. A recent publication used SMB to refold and purify recombinant protein in a continuous process, demonstrating up to 60-fold higher throughput, 180-fold higher productivity and 28-fold lower buffer consumption in comparison to a linear batch process (6). SMB is not well equipped to separate complex mixtures, but adding more zones to SMB via multicolumn countercurrent solvent gradient purification (MCSGP) adds that capability. MCSGP is a developing technology that has been shown to increase purification productivity and yield, but the conditions are often not optimized. Several groups are exploring ways to model and control separation performance in an effort to optimize and automate a process that would meet the requirements for both productivity and product purity specifications (7, 8).

On a lab or clinical scale, exciting new technologies like automated robocolumns can be used for high-throughput process development, which can help relieve bottlenecks by reducing process development time at the drug candidate stage or cutting labor costs. For example, one study demonstrated that small-scale automated robocolumn processing was comparable to large-scale processing across a variety of resins (9).

Throwaway chromatography

Single-use or disposable plastic equipment may offer greater flexibility and efficiency than traditional steel and glass systems, but disposable chromatography equipment has yet to take off in a big way, largely due to high resin costs. High-binding and high-capacity resins, which achieve the most efficient and pure separations, come at a high cost, and many biologic manufacturers are looking to increase the ability to recycle resins rather than dispose of them. Others are using the resin a few times, rather than “single use.” Despite this, the market for disposable packed beds is growing, with up to a 15 percent increase expected in 2014 (10).

Anything but chromatography

We have looked at various ways to speed up chromatography, but what if we could bypass it altogether and replace it with something else? This concept – sometimes referred to as ‘anything but chromatography’ (ABC) – is an attractive one for companies. Various alternative separation methods have been proposed, including precipitation and high-performance tangential flow filtration (11). Another replacement separation technique, aqueous two-phase partitioning, uses two mostly water-based phases, which contain, for example, a polymer (e.g., PEG) or a salt (e.g., phosphate) to separate an antibody by “salting out” (12). Two-phase partitioning has the potential to reduce cost, increase capacity and overcome the limitations of diffusion that can occur in chromatography.
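The basic arithmetic behind two-phase partitioning is a simple mass balance. If the product distributes between the phases with partition coefficient K (concentration in the top phase divided by concentration in the bottom phase) and the phases have volume ratio R (top volume divided by bottom volume), the fraction recovered in the top phase after one equilibrium stage is KR / (1 + KR). The sketch below implements that relation. The function name and the example numbers are hypothetical, and a real process would also need to track how impurities partition and may use more than one stage.

```python
def top_phase_yield(partition_coefficient: float, volume_ratio: float) -> float:
    """Fraction of product found in the top phase after one equilibrium stage.

    partition_coefficient: K = C_top / C_bottom (dimensionless, >= 0)
    volume_ratio:          R = V_top / V_bottom (dimensionless, > 0)
    """
    if partition_coefficient < 0 or volume_ratio <= 0:
        raise ValueError("K must be non-negative and R must be positive")
    kr = partition_coefficient * volume_ratio
    # Mass balance: top / (top + bottom) = K*R / (K*R + 1)
    return kr / (1 + kr)


if __name__ == "__main__":
    # Hypothetical values chosen only to illustrate the trend.
    for k in (0.5, 1.0, 10.0):
        print(f"K = {k:>4}: yield in top phase = {top_phase_yield(k, 1.0):.1%}")
```

The relation shows why both levers matter: a product with a modest K can still be recovered at high yield if the phase volume ratio is shifted in its favor, at the cost of a more dilute product stream.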

These are just some of the developments that are opening up the downstream processing bottleneck. With the regulatory emphasis on QbD and the economic imperative to do more with less, it’s clear that we will need further research and development – and some creative thinking. I’d like to end by handing the baton over to you: what’s your solution?

1. Baden-Württemberg GmbH.

2. E. Langer, “Alleviating Downstream Process Bottlenecks”, Genetic Engineering & Biotechnology News 31, 13 (2011).

3. A. Hanke and M. Ottens, “Purifying Biopharmaceuticals: Knowledge-Based Chromatographic Process Development”, Trends in Biotechnology 32 (4), 210–220 (2014).

4. D. Nagrath et al., “Characterization And Modeling Of Nonlinear Hydrophobic Interaction Chromatographic Systems”, Journal of Chromatography A 1218, 1219–1226 (2011).

5. L. Xu and C.E. Glatz, “Predicting Protein Retention Time In Ion-Exchange Chromatography Based On Three-Dimensional Protein Characterization”, Journal of Chromatography A 1216, 274–280 (2009).

6. M. Wellhoefer et al., “Continuous Processing Of Recombinant Proteins: Integration Of Refolding And Purification Using Simulated Moving Bed Size-Exclusion Chromatography With Buffer Recycling”, Journal of Chromatography A 1337, 48–56 (2014).

7. C. Grossman et al., “Multi-Rate Optimizing Control Of Simulated Moving Beds”, Journal of Process Control 20, 618–629 (2010).

8. M. Krattli et al., “Online Control Of The Twin-Column Countercurrent Solvent Gradient Process For Biochromatography”, Journal of Chromatography A 1293, 51–59 (2013).

9. N. Fontes et al., “Downstream processing of biopharmaceuticals: current approaches and trends”, AAPS NBC presentation, May 20, 2014.

10. E. Langer, “Disposable Chromatography: Options Are Increasing”, Genetic Engineering & Biotechnology News, 10 December, 2013.

11. P. Gagnon, “Technology Trends In Antibody Purification”, Journal of Chromatography A 1221, 57–70 (2012).

12. A. Azevedo et al., “Chromatography-Free Recovery Of Biopharmaceuticals Through Aqueous Two-Phase Processing”, Trends in Biotechnology 27 (4), 240–247 (2009).

Jaime Marach received her doctorate in Biomedical Sciences from the University of California, San Diego, where her thesis centered on the manipulation of protein sequences, their expression in bacterial, insect and mammalian cells, protein purification, and enzymatic characterization assays. She subsequently worked in product and process development for branded and generic pharmaceutical companies, on projects including the synthesis and downstream processing of large peptides, and the development and validation of analytical methods to characterize small and large molecules.