Deep Dive into Biopharmaceutical Analysis

Advanced tools across a number of analytical techniques are helping medicine makers better understand their biomolecules – ensuring both safety and efficacy.

Why is deep biopharma characterization so important for the discovery, development, and manufacture of new biologic drugs?

Anurag Rathore: The importance of characterization for biopharma arises from the complexity of the product. Biotherapeutics are complex nano-machines, designed to work at a specific rate, for a specific function. This specificity can only be assured if all the parts of the nano-machine are intact and accurately aligned. For this, it is important to first understand how different stresses impact the assembly. Moreover, because the product is used in bulk (a single dose contains well over a trillion molecules), the range of contaminants, and their effect on product function, will vary.
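To put the scale of a single dose in numbers, here is a back-of-the-envelope estimate. The 100 mg dose is an illustrative assumption, and the ~150,000 g/mol molar mass is the typical monoclonal antibody size; neither refers to a specific product.

```python
# Back-of-the-envelope estimate of the number of molecules in one dose.
# Illustrative assumptions: a 100 mg dose of a ~150,000 g/mol antibody.
AVOGADRO = 6.02214076e23  # molecules per mole (exact SI value)


def molecules_per_dose(dose_mg: float, molar_mass_g_per_mol: float) -> float:
    """Return the number of molecules in a dose of the given mass."""
    moles = (dose_mg / 1000.0) / molar_mass_g_per_mol  # mg -> g -> mol
    return moles * AVOGADRO


def molecules_at_level(total: float, percent: float) -> float:
    """Return how many molecules a variant present at `percent` represents."""
    return total * percent / 100.0


total = molecules_per_dose(dose_mg=100.0, molar_mass_g_per_mol=150_000.0)
print(f"molecules per dose:    {total:.1e}")
print(f"a 0.1 percent variant: {molecules_at_level(total, 0.1):.1e}")
```

Under these assumptions, a dose holds roughly 4 × 10^17 antibody molecules. Even a variant present at 0.1 percent therefore corresponds to about 4 × 10^14 molecules.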

Characterization helps define all of the above features in minute detail, and this understanding can then be used throughout development and manufacturing as a signature of the molecule’s behavior. In the drug discovery phase, anomalies identified while characterizing a biotherapeutic for a certain target might also help identify treatments for other disorders. To some extent, characterization also helps us understand and manage the risks involved in manufacturing, and it can help reduce the cost of clinical trials. In my opinion, there are very few industries where product quality matters so much to the consumer.

Ultimately, regulation of this quality comes down to efficient and accurate characterization.

Koen Sandra: Anurag summed that up very nicely. Biopharmaceutical products come with enormous structural complexity. The molecules are large (monoclonal antibodies have a molecular weight of approximately 150,000 Da) and heterogeneous as a result of the biosynthetic process, subsequent manufacturing steps and final storage. Although typically only one product is cloned, the final drug substance or drug product is a mixture of hundreds of variants that differ in post-translational modifications and higher order structure. These variants can affect function, stability, efficacy and safety. During development, these characteristics need to be determined in great detail using state-of-the-art methodologies, and then closely monitored prior to clinical or commercial release. This requires a wide range of analytical techniques and methodologies.

What analytical advances have had the biggest impact in terms of developing biologics?

AR: The analytical characterization of biotherapeutics has seen major developments in the last decade; I would highlight two significant advances.

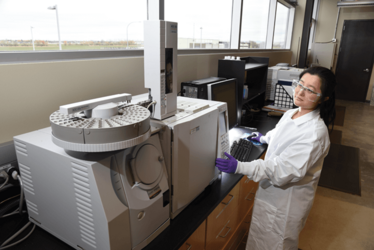

The first is mass spectrometry (MS). When hyphenated with separation tools such as electrophoresis and chromatography, MS has made it possible to probe the molecular structure of complex biomolecules in previously uncharted ways. Combinations such as LC-MS/MS (liquid chromatography-tandem mass spectrometry) allow us to measure the mass of a molecule with an accuracy of a few parts per million. They also let us pinpoint both the type and the exact location of a range of chemical and enzymatic modifications. Even modifications as complex as glycosylation are increasingly being profiled with these tools. If a modification can be separated by a specific mode of chromatography, it can be identified by mass spectrometry.
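As an illustration of what ppm-level mass accuracy means in practice, the sketch below computes the theoretical m/z of a tryptic peptide with and without methionine oxidation, and the parts-per-million error of an observed value. It uses standard monoisotopic residue masses. The peptide DTLMISR, a tryptic peptide from the IgG1 Fc region commonly monitored for oxidation, and the "observed" value are chosen purely for illustration.

```python
# Monoisotopic residue masses (Da) of the 20 standard amino acids.
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER = 18.01056      # added once for the free N- and C-termini
PROTON = 1.007276     # mass of a proton
OXIDATION = 15.99491  # mass shift of one added oxygen (e.g. Met -> Met sulfoxide)


def peptide_mz(sequence: str, charge: int, mod_shift: float = 0.0) -> float:
    """Theoretical m/z of the [M + zH]z+ ion, optionally with a modification shift."""
    neutral_mass = sum(RESIDUE_MASS[aa] for aa in sequence) + WATER + mod_shift
    return (neutral_mass + charge * PROTON) / charge


def ppm_error(observed_mz: float, theoretical_mz: float) -> float:
    """Mass measurement error in parts per million (ppm)."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6


native = peptide_mz("DTLMISR", charge=2)
oxidized = peptide_mz("DTLMISR", charge=2, mod_shift=OXIDATION)
observed = 418.2210  # hypothetical measured value for the native 2+ ion
print(f"native 2+:   {native:.4f}")
print(f"oxidized 2+: {oxidized:.4f}")
print(f"error:       {ppm_error(observed, native):+.2f} ppm")
```

At this m/z, 1 ppm corresponds to roughly 0.0004 m/z units, or about 0.0008 Da in neutral mass. In other words, ppm-level accuracy resolves masses to the third or fourth decimal place for a peptide of this size.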

The second set of increasingly promising tools comprises surface plasmon resonance (SPR) and biolayer interferometry (BLI). These label-free techniques have made binding assays easier to perform and have significantly boosted productivity. They are gradually becoming the industry gold standard for measuring the binding specificity and kinetics of drugs.
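SPR and BLI kinetic data are commonly interpreted with a simple 1:1 (Langmuir) binding model. In this model, the association rate constant k_a and the dissociation rate constant k_d give the equilibrium dissociation constant K_D = k_d / k_a. The sketch below simulates a noise-free sensorgram under that model and recovers k_d from the dissociation phase by log-linear regression. The rate constants, analyte concentration and R_max are illustrative assumptions. Real instrument software typically fits the full model globally to noisy data from several concentrations.

```python
import math


def association(t: float, conc: float, ka: float, kd: float, rmax: float) -> float:
    """Response during analyte injection under a 1:1 Langmuir model."""
    k_obs = ka * conc + kd                 # observed rate constant (1/s)
    r_eq = rmax * conc / (conc + kd / ka)  # steady-state response
    return r_eq * (1.0 - math.exp(-k_obs * t))


def dissociation(t: float, r0: float, kd: float) -> float:
    """Response after the injection ends (buffer flow): single exponential decay."""
    return r0 * math.exp(-kd * t)


def fit_kd(times: list[float], responses: list[float]) -> float:
    """Estimate kd as minus the least-squares slope of ln(response) versus time."""
    ys = [math.log(r) for r in responses]
    t_mean = sum(times) / len(times)
    y_mean = sum(ys) / len(ys)
    num = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, ys))
    den = sum((t - t_mean) ** 2 for t in times)
    return -num / den


ka, kd, rmax = 1.0e5, 1.0e-3, 100.0  # illustrative: 1/(M*s), 1/s, response units
conc = 50e-9                         # 50 nM analyte (assumption)
r_end = association(300.0, conc, ka, kd, rmax)  # response after a 300 s injection
times = [10.0 * i for i in range(31)]          # 0-300 s of dissociation
decay = [dissociation(t, r_end, kd) for t in times]
kd_fit = fit_kd(times, decay)
print(f"kd = {kd_fit:.2e} 1/s, KD = kd/ka = {kd_fit / ka * 1e9:.2f} nM")
```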

Kyle D’Silva: I agree that MS is one of the biggest advances. In recent years, MS has given drug manufacturers greater structural insight into their products than any other technique. The ability to hyphenate charge-based separations, such as ion exchange chromatography, with MS also enables manufacturers to better understand protein structure and protein-protein interactions in the native form, delivering a deeper understanding of the drug and its mode of action.

KS: New mass analyzers have been introduced with improved robustness, sensitivity, resolution and mass accuracy. Today, MS can be used to study primary structural features, such as amino acid sequence and post-translational modifications, as well as higher order structure. All of this generates enormous amounts of data, and powerful new software tools have been developed to handle them. However, it is important to point out that, despite significant progress in software algorithms, data analysis still requires substantial manual intervention. Interpreting all the different spectra remains something of an art to this day, and finding people with the right expertise is very challenging.

Many advances have also been made in chromatography. These include highly efficient columns (with chemistries tailored to the analysis of biopharmaceuticals) and instrumentation capable of operating these columns successfully. Separations are nowadays even performed in multiple dimensions to increase resolution; two-dimensional liquid chromatography (2D-LC) is a good example.
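Part of the appeal of 2D-LC can be expressed through peak capacity, the number of peaks that fit side by side in a separation. For gradient elution, peak capacity is often approximated as 1 + t_g / w (gradient time over average baseline peak width). In the ideal case of fully orthogonal dimensions, the 2D value is the product of the two one-dimensional values. The sketch below uses hypothetical gradient times and peak widths. The optional coverage factor is a simplifying stand-in for incomplete orthogonality. Undersampling of the first dimension, which further reduces the practical value, is not modelled.

```python
def gradient_peak_capacity(gradient_time: float, avg_peak_width: float) -> float:
    """Approximate gradient peak capacity: 1 + t_g / w (same time units for both)."""
    return 1.0 + gradient_time / avg_peak_width


def two_d_peak_capacity(n1: float, n2: float, coverage: float = 1.0) -> float:
    """Product-rule estimate of 2D peak capacity.

    coverage (0-1) is a simplifying factor for the fraction of the 2D separation
    space actually used; 1.0 corresponds to ideal, fully orthogonal dimensions.
    """
    if not 0.0 < coverage <= 1.0:
        raise ValueError("coverage must be in (0, 1]")
    return n1 * n2 * coverage


# Hypothetical conditions: 60 min first-dimension gradient with 0.3 min peaks,
# 0.5 min second-dimension gradients with 0.01 min (0.6 s) peaks.
n1 = gradient_peak_capacity(60.0, 0.3)
n2 = gradient_peak_capacity(0.5, 0.01)
print(f"1D: {n1:.0f}  2D: {n2:.0f}")
print(f"ideal 2D-LC: {two_d_peak_capacity(n1, n2):.0f}")
print(f"with 40% coverage: {two_d_peak_capacity(n1, n2, coverage=0.4):.0f}")
```

Even with modest coverage, the estimate shows how combining two separation mechanisms can raise resolving power well beyond that of a single gradient run.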

Looking back to the characterization of the first recombinant therapeutic protein (insulin) in the late 1970s and early 1980s, chromatography and mass spectrometry offered modest performance compared with the current state of the art. Fast atom bombardment was used to introduce insulin into low-resolution mass spectrometers. Today, electrospray ionization, recognized with the 2002 Nobel Prize in Chemistry, has become the standard way to introduce small peptides and large proteins into high-resolution mass spectrometers. These instruments are equipped with a variety of fragmentation modes that provide sequence information and allow modifications to be detected and localized at very low levels. HPLC separations used to be performed on columns packed with 5-10 µm porous particles, with pumps operating at up to 400 bar. We now have sub-2 µm porous and superficially porous particles and system pressures of up to 1500 bar, allowing us to resolve minor structural differences in a short analysis time.
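Why smaller particles call for higher pressures can be sketched with the reduced van Deemter equation, h = a + b/ν + cν (with reduced velocity ν = u·d_p/D_m), together with Darcy's law, ΔP = φ·η·L·u / d_p². At the optimum velocity, plate height scales with d_p, while the pressure drop scales with 1/d_p³. The coefficients a, b and c, the flow-resistance factor φ, the viscosity and the diffusion coefficient below are generic, textbook-style assumptions, not values for any particular column or analyte. In practice, UHPLC is also often run above the optimum velocity to shorten analyses, which raises pressure further.

```python
import math

# Generic, illustrative assumptions (not values for a specific column or analyte).
A, B, C = 1.0, 2.0, 0.1  # reduced van Deemter coefficients
D_M = 5e-10              # analyte diffusion coefficient, m^2/s (peptide-like)
ETA = 1e-3               # mobile-phase viscosity, Pa*s
PHI = 700.0              # dimensionless flow-resistance factor of a packed bed
LENGTH = 0.15            # column length, m


def optimum_conditions(dp: float) -> dict:
    """Plates and pressure at the van Deemter optimum for particle diameter dp (m)."""
    nu_opt = math.sqrt(B / C)            # reduced velocity minimizing h
    h_min = A + 2.0 * math.sqrt(B * C)   # minimum reduced plate height
    u_opt = nu_opt * D_M / dp            # optimum linear velocity, m/s
    plate_height = h_min * dp            # H = h * dp, m
    plates = LENGTH / plate_height       # N = L / H
    pressure = PHI * ETA * LENGTH * u_opt / dp**2  # Darcy's law, Pa
    return {"u_opt": u_opt, "plates": plates, "pressure_bar": pressure / 1e5}


for dp_um in (5.0, 1.7):
    res = optimum_conditions(dp_um * 1e-6)
    print(f"{dp_um} um: N = {res['plates']:,.0f}, dP = {res['pressure_bar']:.0f} bar")
```

In this model, going from 5 µm to 1.7 µm particles raises the pressure at the optimum by (5/1.7)³ ≈ 25, while the plate count rises only by 5/1.7 ≈ 2.9. This is why sub-2 µm particles went hand in hand with higher-pressure instrumentation.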

There was a time when scientists had to identify all peaks in a peptide map using Edman degradation – a very lengthy task – but now we can easily acquire and process 24 peptide maps a day thanks to the many developments in chromatography, mass spectrometry and accompanying software tools.

Anurag S Rathore

Anurag is familiar with both academic and industry perspectives in biopharma characterization. Today, he is Professor in the Department of Chemical Engineering at the Indian Institute of Technology in New Delhi, but he has previously held roles at Amgen and Pharmacia Corp. His main areas of interest include process development, scale-up, technology transfer, process validation, biosimilars, continuous processing, process analytical technology and quality by design.

Kyle D’Silva

Kyle offers insight from the point of view of a technology provider. Following a PhD in applied ultra-trace mass spectrometry, he held roles as a mass spectrometrist and applications chemist before deciding to move into product marketing at Thermo Fisher Scientific. Today, he focuses on technologies for both pharma and biopharma applications.

Koen Sandra

Koen is currently the Scientific Director of the Research Institute for Chromatography (RIC). He is also the co-founder and co-owner of anaRIC biologics and of Metablys, an institute performing metabolomics and lipidomics research. As a non-academic scientist, he is the author of over 40 highly cited scientific papers and has presented his work at numerous conferences as an invited speaker.

Hermann Wätzig

Hermann has spent his career in academia and is today Professor at the Technische Universität Braunschweig in Germany. Since 2001, he has been the chair of the pharmaceutical analysis/quality control division of the German Pharmaceutical Society. He is a scientific committee member of Germany’s Federal Institute for Drugs and Medical Devices (BfArM) and an expert of the European Pharmacopoeia.

Hermann Wätzig: We are constantly improving our understanding of the quality of the biologics being produced and of the important role played by aspects such as charge variants and size variants. I think this is mainly thanks to chromatography and electrophoresis, in particular (U)HPLC ((ultra-)high-performance liquid chromatography) and capillary electrophoresis. These technologies continue to deliver better separations. MS, of course, is a much newer technology in this field; many interesting things are happening there and I must admit that it continues to surprise me! Chromatography and electrophoresis are older techniques that are very well understood, so naturally the advances are smaller, but they are still very important.

How has biopharma characterization affected the development of biosimilars?

AR: Analytical technologies have made the cost of biotherapeutic production more manageable. Newer guidelines for biotherapeutics around the globe reflect a trend toward greater reliance on detailed characterization, as opposed to clinical trials. This has direct repercussions for drug costs: reducing the cost of clinical trials allows the final product to be priced more affordably.

KD: Advances in analytical techniques enable biosimilar manufacturers to identify potential differences from the reference innovator product that may affect the purity, safety and efficacy of the biosimilar candidate. It is incumbent upon biosimilar manufacturers to exhaustively characterize both the innovator molecule and their own biosimilar version. Modern analytical technologies can even give biosimilar manufacturers greater knowledge of the microheterogeneity of an innovator biologic than the reference product manufacturer itself has.

KS: Regulatory agencies evaluate biosimilars based on their level of similarity to the originator. In demonstrating similarity, enormous weight is placed on analytics, and both the biosimilar and the originator need to be characterized and compared in extensive detail. The analytical package for a biosimilar submission is considerably larger than that for an originator. During the development of an originator product, the major goal is to show a clinical effect; for a biosimilar developer, the goal is to demonstrate similarity. The structural differences identified determine the extent of the clinical studies required. When biosimilar developers re-characterize blockbuster products developed 20 years ago using current state-of-the-art analytical tools, many more details are revealed. These details make it enormously challenging to position a product within the originator specifications.

What are the biggest discussion points in biopharma characterization? Where are there clear gaps or unmet needs?

AR: We have come a long way in understanding protein molecules as products, but this understanding has also led us to appreciate the limits of our knowledge. When we talk about “quality attributes,” there are some cases where we still lack an understanding of “cause and effect.” In most cases, these gaps are due to current technical limitations, which I am certain will be resolved in the near future. One example is aggregation. Aggregates have established immunogenic effects, which makes them a Critical Quality Attribute (CQA), but we still need to understand in greater detail how individual aggregate species affect immune profiles. The mechanism of anti-drug antibody formation is poorly understood, and it remains to be resolved whether the response pathway is generic to aggregates or species-specific. Understanding this would greatly help in defining specific ranges for this class of contaminants. It would also help us predict drug behavior during storage more accurately and, ultimately, the quality of the product at the time it is administered to the patient.

A similar gap exists in our mapping of the glycan profiles of complex biomolecules such as monoclonal antibodies. Given the wide range of possible glycan combinations that can attach to the antibody backbone, complete profiling of these variants is a technical challenge. Moreover, given the acute sensitivity of biotherapeutics to their environment, it is even harder to ascertain how representative a given profile is and which changes have been introduced by the analysis itself.
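A quick count shows how fast the combinations grow. The two heavy chains of an IgG are identical and each carries one Fc glycosylation site. For k glycan species, the number of distinct glycan pairings is therefore the number of unordered pairs with repetition, k(k + 1)/2. The sketch below also folds in one more heavy-chain variable (C-terminal lysine present or absent). The glycan count of 10 and the assumption that sites vary independently are simplifications for illustration.

```python
from math import comb, prod


def homodimer_variants(states_per_site: list[int]) -> int:
    """Distinct variants of a molecule built from two identical chains.

    Each chain carries independent sites with the given number of states. Because
    the chains are interchangeable, the two chain states form an unordered pair
    (combination with repetition), giving s * (s + 1) / 2 for s states per chain.
    """
    s = prod(states_per_site)
    return comb(s + 1, 2)


print(homodimer_variants([10]))     # Fc glycan only, 10 glycan species assumed -> 55
print(homodimer_variants([10, 2]))  # plus C-terminal lysine present/absent -> 210
```

Each additional independently varying site multiplies the per-chain states, so the count quickly climbs into the hundreds and beyond.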

KD: One of the key trends we see discussed is the advancement of MS from the development arena further down the product pipeline into manufacturing and quality control. Here, we see a strong desire among companies to consolidate several chromatographic characterization tests that monitor CQAs into a single multi-attribute monitoring workflow, using high-resolution accurate-mass MS in the quality control lab. Such tools are based on a peptide mapping approach and enable several CQAs to be monitored in parallel in a single run. As a result, several orthogonal methods in biotherapeutic quality control can be replaced by one.
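In a multi-attribute monitoring workflow, each targeted attribute is typically reported as the relative abundance of a modified peptide. A separate new-peak detection step flags features in the sample that are absent from a reference standard. The sketch below illustrates both ideas on made-up peak lists. The function names, the 5 ppm / 0.5 min matching tolerances and the intensity threshold are hypothetical choices, not settings from any vendor software.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    mz: float         # observed m/z
    rt_min: float     # retention time, minutes
    intensity: float  # peak area or height


def relative_abundance(modified_area: float, unmodified_area: float) -> float:
    """Percent modification for one site: modified / (modified + unmodified)."""
    return 100.0 * modified_area / (modified_area + unmodified_area)


def new_peaks(sample: list[Feature], reference: list[Feature],
              ppm_tol: float = 5.0, rt_tol_min: float = 0.5,
              min_intensity: float = 1e4) -> list[Feature]:
    """Return sample features above min_intensity with no match in the reference."""
    def matches(a: Feature, b: Feature) -> bool:
        ppm = abs(a.mz - b.mz) / b.mz * 1e6
        return ppm <= ppm_tol and abs(a.rt_min - b.rt_min) <= rt_tol_min

    return [f for f in sample
            if f.intensity >= min_intensity
            and not any(matches(f, r) for r in reference)]


# Made-up example: oxidation level of one peptide and a new-peak check.
print(f"oxidation: {relative_abundance(2.0e5, 9.8e6):.2f} %")
reference = [Feature(418.2207, 21.4, 9.8e6), Feature(426.2182, 19.9, 2.0e5)]
sample = reference + [Feature(512.7731, 25.3, 3.1e5)]  # hypothetical unexpected peak
print(new_peaks(sample, reference))
```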

HW: I see room for improvement in the setting of proper specifications; at the moment, I feel there are compromises. Biopharma products are incredibly sophisticated and widely available to patients in different therapeutic areas, but precisely because they are so sophisticated, there are still byproducts. It is not completely understood which of these byproducts will cause unwanted side effects and which will not. And right now, I think there is a compromise on the amount of impurities allowed in a biopharma product.

For example, there may be an impurity specification of eight percent for a biologic versus one or two percent for a small-molecule drug. Over time, eight percent should perhaps drop to five percent, then three percent, and so on. The need for lower levels of impurities will drive further advances in analytical and purification technologies.

Specifications are set according to ICH guidelines, and the ICH does a very good job. However, much of this rests on mutual agreement about which specifications are necessary versus those that are actually achievable. Once again, it is a compromise. One would really love to have tighter specifications, but everybody understands (myself included) that this may be too difficult at present.

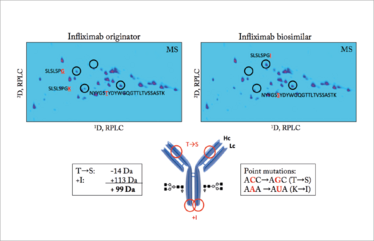

The power of advanced analytical tools: RPLC×RPLC-QTOF-MS analysis of an infliximab originator and a candidate biosimilar, with a drawing of the mAb annotated with the modifications. Courtesy of the Research Institute for Chromatography (www.richrom.com).

Have you noted any different trends or priorities in industry as opposed to academia?

AR: Academic and industrial research goals are mostly well aligned, with a shared primary focus on societal welfare, but their priorities do not always coincide. Academic research is driven by the impact it will have on the global research community, and success is largely measured by publications. Industry, on the other hand, is driven by the impact research will have on day-to-day activities, and success is largely measured by the long- and short-term value it creates for the company. Whereas industry aims to comply with regulatory guidelines for approval, academics constantly seek to evolve established norms. For example, a decade ago, charge heterogeneity of monoclonal antibody-based therapeutics was not considered a CQA, as the acidic and basic variants were believed to have a similar safety and efficacy profile to the main product. However, after numerous interesting publications from both industry and academia, charge heterogeneity is now considered significant to a product’s safety and efficacy.

KD: Industry and academia actually make very effective partners. There is certainly a trend for large bio/pharma manufacturers to outsource discovery to academia, which is full of new thinking. We also see pharma research hubs increasingly clustering around academic sites, such as those in Cambridge, MA (USA), and Cambridge, UK, because of the availability of knowledge. To ensure success, though, I think it’s important to use a bridging organization that understands both languages and the needs of academic and industrial partners – because ultimately the two parties are very different! One good example is the UK charity LifeArc, which acts as a keystone in bridging the divide between academic research and drug developers. These types of organizations usually have the latest drug characterization technology to ensure that quality is maintained as a concept moves from the academic arena to commercial drug development.

HW: As Anurag says, the goals of each side are ultimately the same, but I think there is more freedom in academia to try out new ideas and techniques. Because findings are so important in academia, researchers embrace the latest, most sophisticated equipment for proper characterization to gain greater knowledge. Academia can also dwell on projects for longer than industry (where time is a constant pressure), and so can investigate a molecule more thoroughly and gain a deep understanding of it.

Many technological advances and new instruments offer increased sensitivity. Should sensitivity always be a priority?

AR: Manufacturers have been continuously challenged to develop analytical methods for the timely and accurate determination of the product, as well as of potential contaminants, throughout the manufacturing process. This spans raw material selection, process analysis, formulation development and release testing. Analytical technology advances that offer increased sensitivity and shorter analysis times are always welcome, but the need is application-specific. For instance, MS-based methods and next-generation sequencing are delivering greater sensitivity, dynamic range, resolution, mass accuracy and user-friendliness in less time. Meanwhile, pharmacokinetic/pharmacodynamic analysis requires high-sensitivity methods capable of detecting picomole to nanomole amounts of the target drug in the presence of multiple interfering compounds.

While researchers and equipment manufacturers continuously raise the bar on sensitivity, I feel that what we lack is an understanding of how the different tools compare with each other, and which of them are redundant.

KD: Companies need to understand their products, but although sensitivity gives greater confidence in results, on its own it doesn’t deliver knowledge. Every step that takes the customer from sample to knowledge must be as simple as possible, including sample preparation, simplified acquisition, automated data processing and interpretation, and robust reporting of results. It is incumbent on instrument manufacturers to provide tools that deliver knowledge to the end user, not just performance. However, confidence in the result often comes from the foundation of high performance instrumentation and high quality data. Without this foundation, poor data quality can lead to misinterpreted results, with huge time and cost implications.

KS: Better sensitivity is not necessarily what biopharma companies want, but it is a consequence of recent advances in analytical tools. Remarkably, we can now detect individual host cell proteins (HCPs) at 0.1 ppm levels and product variants at levels below 0.1 percent. In project meetings, we often hear comments such as “we don’t want to know about all these low-level variants” or “we hope you have not found new liabilities.” As analytical scientists, we feel it is our duty to reveal all the details of the molecules we are studying. At the HPLC 2016 meeting in San Francisco, Reed Harris (Genentech) showed an interesting graph plotting the number of modifications revealed in a molecule against popularity within the project team. When the first set of modifications is discovered, popularity within the team increases substantially. After yet another set of modifications has been shared, popularity declines. At a certain point you become “Doctor Doom” because of the consequences your findings can have on the timeliness of a project.
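The two detection levels quoted here are on very different scales. HCP levels in ppm are conventionally expressed as nanograms of HCP per milligram of product (ng/mg), whereas 0.1 percent corresponds to 1,000 ppm. The small sketch below converts between these units. The 10 mg/mL product concentration and the 100 mg dose are illustrative assumptions.

```python
def hcp_ppm(hcp_ng_per_ml: float, product_mg_per_ml: float) -> float:
    """HCP level in ppm, i.e. ng of HCP per mg of product."""
    return hcp_ng_per_ml / product_mg_per_ml


def percent_to_ppm(percent: float) -> float:
    """Convert a mass fraction in percent to parts per million."""
    return percent * 1e4


def hcp_ng_per_dose(ppm: float, dose_mg: float) -> float:
    """Absolute HCP amount (ng) in a dose at the given ppm level."""
    return ppm * dose_mg


# Illustrative numbers: 1 ng/mL HCP in a 10 mg/mL antibody solution.
level = hcp_ppm(1.0, 10.0)
print(f"HCP level: {level} ppm")
print(f"0.1 percent variant: {percent_to_ppm(0.1):.0f} ppm")
print(f"HCP in a 100 mg dose: {hcp_ng_per_dose(level, 100.0):.0f} ng")
```

In relative terms, a 0.1 ppm HCP level is therefore about 10,000 times lower than a 0.1 percent variant level.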

In the development of new techniques and technologies, I think priority should lie in robustness. We need to obtain the same results over and over again.

HW: As an academic, my opinion is that sensitivity is always beneficial! Sensitivity allows you to see and understand more, and I think scientists in commercial biopharma should share this view. Sensitivity, however, is not the only important feature of a system. Separation efficiency and robustness are equally important, depending on what you are trying to achieve. If you are looking for a certain minor component, you need sensitivity; if you are looking to control a more complicated process, you perhaps need separation efficiency. System reliability is also crucial. Interestingly, I think that standard analytical equipment can sometimes be more reliable than newer, sophisticated instruments. For example, I find that standard HPLC equipment can be a little more reliable than highly sophisticated HPLC, electrophoresis or MS systems. I am sure that all the instrument vendors are addressing this, though, and considerable progress is most definitely being made.

Could you explain the challenge of developing systems for real-time analysis during biomanufacture?

AR: One area that is ripe for future development is real-time monitoring of product attributes through all stages of development and manufacturing. Typically, the different manufacturers of process equipment and analytical equipment each use their own proprietary software for equipment control. This creates significant challenges when one tries to integrate process and analytical equipment to obtain real-time information during manufacturing. Another major challenge is the mismatch between the time available for analysis and the decision making required during processing. For example, a typical chromatographic elution takes 15-30 minutes, whereas a typical HPLC assay takes 30-60 minutes for a single analysis. The result may therefore only arrive after the step it was meant to inform is already complete.
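The timing mismatch described above can be made concrete with a simple count. Suppose samples are drawn at fixed intervals during a process step and each result takes the full assay time to come back: how many results arrive before the step ends? The sketch assumes results are not delayed by queueing (for example, several instruments running in parallel) and uses the quoted 15-30 and 30-60 minute ranges. The 5-minute sampling interval is a hypothetical choice.

```python
import math


def results_before_step_ends(step_min: float, assay_min: float,
                             sampling_interval_min: float) -> int:
    """Count results available by the end of a process step.

    Samples are drawn at t = 0, interval, 2*interval, ... during the step, and each
    result is assumed to arrive exactly assay_min after its sample (no queueing).
    """
    if assay_min > step_min:
        return 0
    # Only samples drawn at or before (step_min - assay_min) report in time.
    return math.floor((step_min - assay_min) / sampling_interval_min) + 1


for step in (15, 30):
    for assay in (30, 60):
        n = results_before_step_ends(step, assay, sampling_interval_min=5)
        print(f"{step} min step, {assay} min assay: {n} result(s) in time")
```

Even in the best case, only one result comes back, from the very first sample, and only as the step finishes. That is too late to steer the step itself.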

KD: The complexity of biopharmaceuticals requires advanced technologies to analyze them. More technologies are now being developed with greater automation for routine process-analytical and quality control environments. Do we have high-resolution mass spectrometry sitting next to the bioreactor for real-time monitoring? Not routinely. But multi-attribute monitoring using high-resolution accurate-mass MS is already being deployed at scale in biopharma quality control departments, and the production environment of the near future will almost certainly adopt such techniques too.

KS: This has everything to do with the complexity of biomolecules. Oxygen levels, pH and so on can readily be measured using sensors, but studying the biopharmaceutical in situ demands more sophisticated chromatographic or mass spectrometric tools, which very often involve tedious sample preparation. As an extreme example, monitoring glycosylation requires glycan release, labeling and chromatographic separation (possibly also incorporating a purification step). Various groups within the biopharmaceutical industry have nevertheless made enormous progress in real-time monitoring of CQAs directly from the process.

HW: It would most definitely be very valuable to have more analytical data during processing, but this is not easy. To start with, there is the issue of fouling of the sensors or sampling systems in biopharma production: how do you prevent carryover from one analysis to the next? Many basic challenges like this must be solved before we can begin to implement analytical systems directly in production.

What emerging characterization tools have potential but are not yet routinely applied?

AR: Advances in MS have dramatically improved our ability to obtain detailed molecular information on proteins. In addition, the continued development and deployment of such MS-based applications will enable finer control of bioprocess optimization, allowing manufacturing process changes to be correlated with both molecular structure and yield. Numerous hybrid MS-based techniques have yet to make their way into routine use, including ion mobility-MS, capillary electrophoresis-MS, hydrogen-deuterium exchange-MS (HDX-MS), and size-exclusion chromatography coupled to native MS. Alternatives to conventional cell-based analytical methods, and continuous processing that relies on process analytical technology for real-time monitoring, are in the pipeline for implementation in industry. Real-time efficacy assessment platforms have also been proposed (for example, CANScript technology), which I believe will greatly enhance effective biologic development.

KD: We too see phenomenal growth in HDX-MS, especially in biosimilarity studies by biosimilar manufacturers, but also among innovator companies looking to protect their patents. Top-down or middle-down MS characterization of proteins is also showing great promise for confirming protein structure, as a simple method requiring minimal or no sample preparation. Historically, the sequence coverage obtained from a top-down fragmentation experiment didn’t meet the demands of biopharmaceutical manufacturers. With advances in fragmentation methods, however, we see great interest in this technique because it can achieve near-full coverage. This confirms the primary structure, localizes post-translational modifications and provides the intact or subunit mass of a biologic. Intact and subunit analysis of biologics is also becoming more information-rich, thanks to the coupling of chromatographic separations with MS and the clarity and accuracy of intact protein mass spectra on the latest MS platforms.
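Sequence coverage in top-down experiments is often expressed as the fraction of inter-residue bonds cleaved, as evidenced by N-terminal (b or c) and C-terminal (y or z) fragment ions. The sketch below computes that bond-cleavage coverage from lists of observed fragment ion numbers. The fragment lists and the 214-residue chain are invented for illustration; real workflows first have to assign those fragments from the spectra.

```python
def bond_cleavage_coverage(length: int, n_term_ions: list[int],
                           c_term_ions: list[int]) -> float:
    """Percent of the length - 1 inter-residue bonds explained by fragment ions.

    n_term_ions: ion numbers i of observed b/c ions (cleavage after residue i).
    c_term_ions: ion numbers j of observed y/z ions (the last j residues), which
    map to cleavage after residue length - j.
    """
    if length < 2:
        raise ValueError("need at least two residues")
    cleaved = {i for i in n_term_ions if 1 <= i < length}
    cleaved |= {length - j for j in c_term_ions if 1 <= j < length}
    return 100.0 * len(cleaved) / (length - 1)


# Invented example: a hypothetical 214-residue chain with scattered fragments.
n_ions = list(range(2, 60)) + [88, 95, 120]
c_ions = list(range(1, 70)) + [150, 151]
print(f"{bond_cleavage_coverage(214, n_ions, c_ions):.1f} % of bonds cleaved")
```

Counting cleaved bonds, rather than summing fragment lengths, avoids double counting when N- and C-terminal ions report the same cleavage site.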

KS: I think it is a very exciting time to be involved in biopharmaceutical analysis given the enormous advances in instrumentation. Mass spectrometry, the workhorse in R&D, is slowly finding its way into routine environments as a release tool. We also have high hopes for 2D-LC, where two different separation mechanisms are combined, with the aim of increasing overall resolution and thereby providing the next level of product detail.

HW: I expect considerable progress to come from automation, particularly of sample preparation steps. Fewer errors from dilution or extraction steps will certainly improve analytical precision. Miniaturization also has great potential to speed up analyses and to improve precision by enabling multiple measurements, with the averaged values used as reportable results. Improved surface technologies can reduce fouling of analytical instrumentation. This would enable the much-desired process analysis of biopharma products and their impurity profiles during production and clean-up.
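The precision gain from averaging replicate measurements follows from basic statistics. If individual measurements have standard deviation s and their errors are independent and random, the mean of n replicates has a standard error of s/√n. The sketch below reports the mean of replicates together with that standard error. The replicate values are invented. Note that systematic errors, such as a biased dilution step, are not reduced by averaging.

```python
import math
import statistics


def reportable_result(replicates: list[float]) -> tuple[float, float]:
    """Return (mean, standard error of the mean) for replicate measurements."""
    if len(replicates) < 2:
        raise ValueError("need at least two replicates to estimate precision")
    mean = statistics.fmean(replicates)
    sd = statistics.stdev(replicates)  # sample standard deviation
    return mean, sd / math.sqrt(len(replicates))


def replicates_needed(sd: float, target_se: float) -> int:
    """Smallest n with sd / sqrt(n) <= target_se."""
    return math.ceil((sd / target_se) ** 2)


# Invented main-peak purities (percent) from six miniaturized injections.
purity = [97.8, 98.1, 97.9, 98.3, 98.0, 97.7]
mean, se = reportable_result(purity)
print(f"reportable result: {mean:.2f} % (standard error {se:.2f} %)")
print("replicates for a 0.2 % standard error at 0.4 % SD:", replicates_needed(0.4, 0.2))
```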
